Data Center Heat Energy Re-Use Part 3a: Hot Water Cooling

In this series we are exploring the different ways that data center operators attempt to be responsible global citizens while assuring long-term return on assets by reducing their carbon footprint through capturing and re-using the heat energy produced by their ICT equipment. I have taken for my conversation starter an October 2011 MIT Technology Review article by Neil Savage, “Greenhouse Effect: Five Ideas for Re-using Data Centers’ Waste Heat.” The five examples he cites in this article actually represent five general strategies and therefore I find them to be a useful jumping off point for exploring developments over the subsequent nine years. The ideas were:

Notre Dame University data center heated a greenhouse.

A Syracuse University data center produced its own electricity and used excess cold water for air conditioning an adjacent office building in summer and excess hot water to heat it during the winter.

An IBM research data center in Zurich used warm water liquid cooling and used the warmer “return” water for heating an adjacent lab.

Oak Ridge National Laboratory developed a mechanism that affixed to a microprocessor and produced electricity.

A Telecity data center in Paris provided heat for research experiments on the effects of climate change.

In part one, we looked at variations on the Notre Dame University use of data center waste hot air to maintain an adjacent greenhouse through those northern Indiana winters. While we covered several different example cases of hot air re-use, in general the low grade energy of 80-95˚F air and the requirement that the application be essentially adjacent to the data center presented reasonable obstacles to attractive ROI. In reviewing the use of 80˚F waste air from a UPS room to reduce the lift on generator block heaters’ 100˚F target, we determined that a good case could be made that effective airflow management practices allowing a data center to operate closer to the ASHRAE upper recommended limit would result in waste air that could eliminate the need altogether for generator block heaters. This example addressed both the energy grade and adjacency obstacles. Otherwise, we found the most effective uses of heat energy from data center return air occurred in northern European local district heating networks and discovered that over 10% of Sweden’s heating energy comes from data centers. In fact, local heating districts in one form or another represent a useful model for effective data center energy reuse, as we will see in subsequent discussions.

I coined “tapping the loop” for the second category of data center energy re-use, wherein the supply side of the chilled water loop could be tapped for ancillary cooling and the return side could be tapped for either heating or cooling. In the Syracuse University example from Savage’s article, the primary energy source for re-use was turbine exhaust, which was hot enough either to drive absorption chillers providing building air conditioning (which was in turn tapped to cool the data center) or to pass through a heat exchanger to heat the building during the winter. A more current shining star for “tapping the loop” is the Westin-Amazon project in Seattle, which involved somewhat more straightforward engineering but much more creativity in overall project management, requiring collaboration among various government agencies, public utilities, and corporations pursuing mutually beneficial self-interest. Essentially, the Amazon office buildings represent the equivalent of a local heating district “customer” for Clise Properties (the owner of the Westin Carrier Hotel), and Clise Properties and McKinstry Engineering formed an entity registered as an approved utility company. Amazon will avoid some 80 million kWh of heating energy cost, and Clise Properties will avoid the expense of running evaporation towers and the resultant water loss. While the Westin-Amazon model represents, to me, the perfect blueprint for an effective “tapping the loop” data center energy re-use project, a review of a similar project cancelled at Massachusetts Institute of Technology revealed the complexities of trying to herd all the cats for such an endeavor, which we will see again in this third part of the series.

The third category of data center heat energy re-use from the MIT Technology Review is hot water cooling, which can benefit either of the first two categories, but is particularly beneficial with data center liquid cooling (which is finally gaining some meaningful traction in our industry). As previously mentioned, if data center waste air is being used to keep generators ready to start, raising supply air from 65˚F or 70˚F up to 78-80˚F will produce a return air temperature high enough to eliminate block heaters. Furthermore, in the Westin-Amazon project, a good data center airflow containment execution could allow the temperature of the data center water supplied to the utility heat exchanger to be increased enough to reduce the heat recovery plant lift by 28%. In neither of these cases are we talking about cooling with warm or hot water, but even moving the needle these small steps can produce significant benefits. When we start working with hot water, we get higher grade waste heat energy, and water is easier to move around than air.

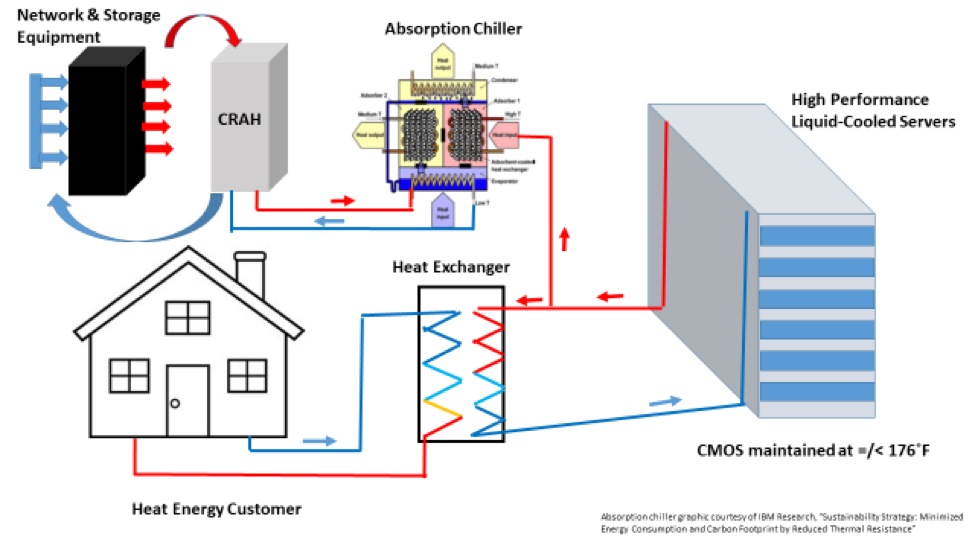

The IBM proof-of-concept data center at the Zurich Research Laboratory took advantage of innovations in direct contact liquid cooling whereby hot water was pumped through copper microchannels attached to computer chips. They found that 140˚F supply water maintained chip temperatures around 176˚F, safely below the recommended 185˚F maximum. This hot water cooling resulted in a post-process “return” temperature of 149˚F, which was an adequate grade of heat energy for both building heating and cooling through an absorption chiller, without requiring a boost from heat pumps. In addition to providing heat for an adjacent lab, the absorption chiller provided 49 kW of cooling capacity at about 70˚F. A simplified overview of this approach is illustrated in Figure 1.

Figure 1: Simplified Flow of Data Center Liquid Cooling Energy Re-use
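To put the IBM Zurich supply and return temperatures in perspective, here is a rough sketch of the steady-state heat balance Q = ṁ · cp · ΔT for the water loop. The 140˚F supply and 149˚F return come from the proof of concept described above; the 100 kW heat load, the function names, and the rounded specific heat of water are my own assumptions for illustration, not figures from the IBM project.

```python
# Rough sketch: water flow needed to carry a heat load across the
# 140 F supply / 149 F return temperatures cited for the IBM Zurich loop.
# The 100 kW load below is hypothetical, chosen only for illustration.

WATER_CP_J_PER_KG_K = 4180.0  # approximate specific heat of liquid water


def delta_f_to_kelvin(delta_f: float) -> float:
    """Convert a temperature *difference* in Fahrenheit degrees to kelvin."""
    return delta_f * 5.0 / 9.0


def required_flow_kg_per_s(heat_load_w: float, supply_f: float, return_f: float) -> float:
    """Mass flow from the steady-state heat balance Q = m_dot * cp * dT."""
    delta_t_k = delta_f_to_kelvin(return_f - supply_f)
    if delta_t_k <= 0:
        raise ValueError("return temperature must exceed supply temperature")
    return heat_load_w / (WATER_CP_J_PER_KG_K * delta_t_k)


if __name__ == "__main__":
    flow = required_flow_kg_per_s(heat_load_w=100_000.0, supply_f=140.0, return_f=149.0)
    print(f"{flow:.2f} kg/s of water for a hypothetical 100 kW load")
```

The 9˚F rise is only 5 K, so under these assumptions the loop has to move a little under 5 kg of water per second for every 100 kW of heat. A wider supply-to-return spread would cut the flow proportionally, which is one of the trade-offs in choosing loop temperatures.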

Around the same time that the IBM proof-of-concept hot water liquid cooling experiment was being implemented in Switzerland, eBay was experimenting with warm water cooling in Phoenix in the well-publicized Project Mercury. Project Mercury involved one part of the data center cooled by a chilled water loop connected to chillers and a second data center using condenser return water from the first, at up to 87˚F, to supply rack-mounted rear door heat exchangers. Obviously, temperatures exceeded ASHRAE recommended server inlet air temperatures, but remained within the Class A2 allowable range. It was within this operation that Dean Nelson and his team came up with a business mission-based data center efficiency metric tying data center costs to customer sales transactions, thereby giving form to that elusive tipping point between data center efficiency and effectiveness. In this case, the “customer” was internal and the waste heat was not used as a heat energy source but as a cooling source.

The Project Mercury model does, in fact, offer a vision for low risk warm water cooling that could be available to many data centers without having to transition all the way to some form of direct contact liquid cooling. For example, data centers using rear door heat exchangers can operate with supply temperatures north of 65˚F, which easily exceeds the return temperature of a building comfort cooling return water loop. Tapping into the return water is essentially free cooling and then during the time of year when the building AC might not be running continuously (or at all, my friends in Minnesota), the rear door heat exchangers can be supplied through a free cooling heat exchanger economizer. The same principle applies to direct contact liquid cooling, which should be essentially free to operate in any facility with any meaningful-size comfort cooling load.

More recently, IBM Zurich has translated the proof-of-concept into a full production supercomputer (LRZ SuperMUC-NG, at the Leibniz Supercomputing Centre near Munich), with a parallel project in Oak Ridge, Tennessee. Bruno Michel, Manager of Smart System Integration at the Zurich labs, claims the production supercomputer is actually a negative emissions facility because all the ICT equipment is powered by renewable energy and then the heating and cooling produced by the data center represents emission avoidance. The temperature profile of the different steps in the process in Figure 1 will vary depending on the customer situation and requirements. For example, to provide cooling to the network and storage equipment during warmer weather when free cooling is not available and to provide usable heat energy to district heating networks during cooler weather, the data center runs at 149˚F. To provide floor heating to residential customers, it can drop down to 131˚F and to support free cooling at Oak Ridge they will operate at 113˚F. The Fahrenheit absorption chiller operates with a 127˚F drive temperature to deliver 68˚F chilled water to the cooling units serving storage and network equipment, with a total cooling capacity of 608 kW.
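Since most liquid cooling vendors and European district heating operators quote temperatures in Celsius, it can help to see the operating points above side by side in both units. The following is a minimal sketch; the dictionary labels are my own shorthand for the use cases described in the preceding paragraph, and the temperatures are simply the Fahrenheit values quoted there.

```python
# Minimal sketch: the operating temperatures quoted above, converted to Celsius.
# Labels are shorthand for the use cases in the text, not official terminology.


def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert an absolute temperature from Fahrenheit to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


SETPOINTS_F = {
    "district heating / chiller-supported cooling": 149.0,
    "residential floor heating": 131.0,
    "free cooling (Oak Ridge)": 113.0,
    "chiller drive temperature": 127.0,
    "chilled water to storage and network cooling units": 68.0,
}


def setpoints_in_celsius(setpoints_f: dict[str, float]) -> dict[str, float]:
    """Return the same setpoints rounded to one decimal place in Celsius."""
    return {name: round(fahrenheit_to_celsius(t), 1) for name, t in setpoints_f.items()}


if __name__ == "__main__":
    for name, temp_c in setpoints_in_celsius(SETPOINTS_F).items():
        print(f"{name}: {SETPOINTS_F[name]:.0f} F = {temp_c:.1f} C")
```

The three data center operating points work out to 65, 55, and 45˚C, which suggests the original design values were set in round Celsius steps.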

The IBM project depends on breakthrough innovation in reducing thermal resistance, thereby allowing higher water temperature at the chip, resulting in actual overall chip performance improvement. Nevertheless, any of the various direct contact liquid cooling solutions available on the market today can deliver some significant portion of the benefits of hot water cooling. They all make their own claims on how hot the “cooling” supply water can be to maintain adequate chip temperatures and even improve chip performance over traditional air cooling. Even when these temperatures may not be high enough to directly replace traditional heating sources (boilers, etc.) or drive absorption chillers, they are still high enough to dramatically reduce the lift required on heat pumps to raise that heat to a useful level. Furthermore, at liquid cooling temperatures, there should be no need for chillers or mechanical cooling. Next time we will look at some of the investment and operational cost trade-offs associated with harvesting the benefits of hot water cooling and some of the larger societal and infrastructure challenges.
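To illustrate why a smaller heat pump lift matters, here is a sketch using the ideal (Carnot) heating coefficient of performance, COP = T_hot / (T_hot − T_cold), with temperatures in kelvin. This is a theoretical upper bound only; real heat pumps achieve a fraction of it. The 160˚F delivery temperature is a hypothetical target I picked for illustration, while 80˚F and 113˚F echo the waste air and lowest hot water temperatures mentioned earlier in this series.

```python
# Sketch: ideal (Carnot) heating COP as a function of heat pump lift.
# This is an upper bound for comparison, not a prediction for real equipment.
# The 160 F delivery temperature is a hypothetical target.


def fahrenheit_to_kelvin(temp_f: float) -> float:
    """Convert an absolute temperature from Fahrenheit to kelvin."""
    return (temp_f - 32.0) * 5.0 / 9.0 + 273.15


def carnot_heating_cop(source_f: float, delivery_f: float) -> float:
    """Ideal heating COP = T_hot / (T_hot - T_cold), temperatures in kelvin."""
    t_cold = fahrenheit_to_kelvin(source_f)
    t_hot = fahrenheit_to_kelvin(delivery_f)
    if t_hot <= t_cold:
        raise ValueError("delivery temperature must exceed source temperature")
    return t_hot / (t_hot - t_cold)


if __name__ == "__main__":
    delivery_f = 160.0  # hypothetical heating supply target
    for label, source_f in [("80 F waste air", 80.0), ("113 F return water", 113.0)]:
        cop = carnot_heating_cop(source_f, delivery_f)
        print(f"{label}: lift {delivery_f - source_f:.0f} F, ideal COP {cop:.1f}")
```

Under these assumptions, starting from 113˚F water rather than 80˚F air cuts the lift from 80˚F to 47˚F, and the ideal COP rises accordingly. Actual savings depend on the equipment, but the direction of the effect is the same.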


Ian Seaton

Data Center Consultant
