Data Center Heat Energy Re-Use Part 2: Tap the Chilled Water Loop

In this series we are exploring the different ways that data centers attempt to be responsible global citizens by reducing their carbon footprint through capturing and re-using the heat energy produced by their ICT equipment. I have taken as my launch pad an October 2011 MIT Technology Review article by Neil Savage, “Greenhouse Effect: Five Ideas for Re-using Data Centers’ Waste Heat.” The five examples he cites represent five general strategies, so I find them a useful jumping-off point for exploring developments over the subsequent eight and a half years. The ideas were:

A Notre Dame University data center heated a greenhouse.

A Syracuse University data center produced its own electricity and used excess cold water to air-condition an adjacent office building in summer and excess hot water to heat it in winter.

An IBM research data center in Zurich used warm-water liquid cooling and used the warmer “return” water to heat an adjacent lab.

Oak Ridge National Laboratory developed a device that attached to a microprocessor and generated electricity from its heat.

A Telecity data center in Paris provided heat for research experiments on the effects of climate change.

In part one, we looked at what could be done with the hot return air produced in the data center. While shipping that hot air next door may seem, on the surface, like the most straightforward path to putting it to good use, we found that this approach faces some rather obvious obstacles to an attractive ROI and an acceptably short payback period. The heat is generally low-grade, with temperatures ranging from 80˚F to 95˚F. Air does not ship easily (Amazon prefers to ship your air mattress deflated), so the point of use needs to be essentially adjacent to the data center.
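To put a rough number on why air does not ship easily, the sketch below compares the volumetric flow of air and of water needed to carry the same heat load at the same temperature rise, using Q = ρ·V̇·c_p·ΔT. The property values are approximate textbook figures near room temperature, and the 100 kW load and 10 K rise are illustrative assumptions, not figures from any project discussed here.

```python
# Approximate fluid properties near room temperature (rounded textbook values).
AIR_DENSITY = 1.2        # kg/m^3
AIR_CP = 1005.0          # J/(kg*K)
WATER_DENSITY = 998.0    # kg/m^3
WATER_CP = 4186.0        # J/(kg*K)


def volumetric_flow(heat_w: float, delta_t_k: float, density: float, cp: float) -> float:
    """Volumetric flow (m^3/s) needed to carry heat_w watts with a rise of delta_t_k kelvin."""
    # Q = rho * V_dot * cp * dT, solved for V_dot
    return heat_w / (density * cp * delta_t_k)


if __name__ == "__main__":
    heat_w = 100_000.0  # illustrative 100 kW load
    rise_k = 10.0       # illustrative 10 K (18 F) temperature rise
    air = volumetric_flow(heat_w, rise_k, AIR_DENSITY, AIR_CP)
    water = volumetric_flow(heat_w, rise_k, WATER_DENSITY, WATER_CP)
    print(f"air:   {air:.2f} m^3/s")
    print(f"water: {water * 1000:.2f} L/s")
    print(f"air needs about {air / water:,.0f} times the volume of water")
```

Because the ratio depends only on the two fluids' volumetric heat capacities, air needs on the order of 3,500 times the volume of water for any load and temperature rise, which is why moving low-grade air heat more than a short distance rarely pays off.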

Besides the Notre Dame greenhouse, there are numerous other examples of data centers providing heat to adjacent offices and laboratories, and we learned about Sabey’s use of 80˚F waste air from the UPS room to reduce the lift needed to reach their generator block heaters’ 100˚F target. John Sasser of Sabey acknowledged that if the data center supply air were maintained at or near the 80˚F ASHRAE recommended maximum, thereby producing return air in the neighborhood of 100˚-110˚F (assuming excellent airflow management practices), then the data center could maintain generator oil and coolant temperatures without any block heaters at all. His problem is that the operational parameters are set by the tenants, who typically want SLAs specifying lower temperatures. Nevertheless, for a data center whose owner/operator controls its own destiny, using data center waste air to replace generator block heaters may be a viable approach, and the ROI suddenly gets very attractive.

Otherwise, we found the most effective uses of heat energy from data center return air in northern Europe, where the energy was transferred from air to water, amplified, and distributed through local district heating networks. The presence of district heating, along with generally high electric utility costs, has allowed these data center quasi-utilities to become profit centers in their own right, to the point where over 10% of Sweden’s heating energy comes from data centers.
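The Sabey block-heater trade-off can be sketched as simple arithmetic. This assumes, as a simplification, that the heat a block heater must supply scales with the difference between its 100˚F target and the temperature of the air around the engine; the 40˚F winter ambient in the usage example is a hypothetical value, and the function names are illustrative.

```python
def heater_lift_f(surrounding_f: float, target_f: float = 100.0) -> float:
    """Temperature difference (F) the block heater must still make up; 0 means none."""
    return max(0.0, target_f - surrounding_f)


def lift_eliminated(ambient_f: float, waste_air_f: float, target_f: float = 100.0) -> float:
    """Fraction of heater duty removed by surrounding the engine with waste air
    instead of ambient air (assumes duty scales with the temperature difference)."""
    baseline = heater_lift_f(ambient_f, target_f)
    if baseline == 0.0:
        return 0.0  # ambient already meets the target; nothing to eliminate
    return 1.0 - heater_lift_f(waste_air_f, target_f) / baseline


if __name__ == "__main__":
    winter_ambient_f = 40.0  # hypothetical outdoor temperature
    for source_f in (80.0, 105.0):  # UPS-room air vs. warm return air
        share = lift_eliminated(winter_ambient_f, source_f)
        print(f"{source_f:.0f} F air removes {share:.0%} of heater duty")
```

With a hypothetical 40˚F ambient, 80˚F UPS-room air removes about two-thirds of the heater duty, and return air above 100˚F removes it entirely, which is Sasser's point about warmer supply air making the heaters unnecessary.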

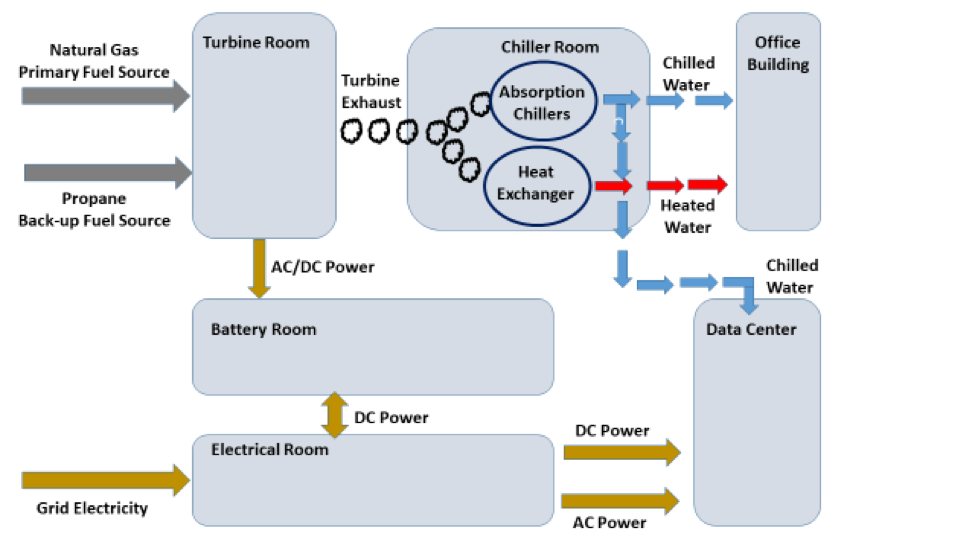

The second category of data center energy recapture I will call “tapping the loop,” that is, the chilled water loop. “Chilled water loop” can be something of a misnomer: we may tap it on the supply side (chilled) or on the return side (warm, or at least less chilled). In the Syracuse University example from Savage’s article, part of that water loop was downright hot. Figure 1 provides a simplified overview of Syracuse University’s data center and its supporting electrical and mechanical infrastructure.

Figure 1: Syracuse University Trigeneration Data Center

This example had several unique and innovative features for a decade-old deployment and actually represented a proof-of-concept collaboration between the university and IBM. First, primary power was generated on site by a roomful of natural gas-powered turbines. The electrical grid connection was purely a backup to the backup: there was N+2 turbine redundancy, then a propane reserve as backup to the liquid natural gas source, and then full UPS battery backup, charged by the turbine output. The grid connection was intended for use only after those four upstream levels had either failed or been exhausted. The turbines produced 585˚F exhaust, which was put to work in two separate operations in the chiller room: 1) driving absorption chillers with enough capacity to cool the data center and also supply the air conditioning chilled water loop for a large nearby office building, and 2) supplying heat to an air-to-liquid heat exchanger that delivered heat to that same office building during the winter. Roger Schmidt, IBM Scientist Emeritus and IBM technical lead on this project, informed me that after ten years the turbines had worn out and the cost of electricity was so low that the university has since converted this data center to a traditional chiller and cooling tower mechanical infrastructure. Nevertheless, this facility had a good run and provided impetus for exploring the benefits of loop tapping.
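The Syracuse power arrangement amounts to an ordered fallback chain, with the grid consulted last. The sketch below walks that chain in priority order; the `PlantStatus` fields, the turbine counts in the example, and the function name are hypothetical, and real switchgear and controls logic is far more involved.

```python
from dataclasses import dataclass


@dataclass
class PlantStatus:
    """Hypothetical snapshot of the on-site plant (field names are illustrative)."""
    turbines_online: int   # turbines able to run right now
    turbines_needed: int   # N: turbines required to carry the load
    natural_gas_ok: bool   # primary fuel supply available
    propane_ok: bool       # propane reserve available
    ups_charge: float      # UPS state of charge, 0.0 to 1.0


def select_power_source(s: PlantStatus) -> str:
    """Return the highest-priority source still able to carry the load."""
    # Turbines with N+2 redundancy: up to two units can be lost before this fails.
    if s.turbines_online >= s.turbines_needed:
        # Fuel: natural gas first, then the propane reserve.
        if s.natural_gas_ok:
            return "turbines on natural gas"
        if s.propane_ok:
            return "turbines on propane"
    # UPS batteries, charged by turbine output while it was available.
    if s.ups_charge > 0.0:
        return "UPS batteries"
    # The grid, used only after every upstream level has failed or run out.
    return "utility grid"


if __name__ == "__main__":
    # Illustrative: two of N+2 = 6 turbines down, and the gas supply interrupted.
    status = PlantStatus(turbines_online=4, turbines_needed=4,
                         natural_gas_ok=False, propane_ok=True, ups_charge=1.0)
    print(select_power_source(status))  # turbines on propane
```

Checking the levels strictly in order is what makes the grid a "backup to the backup": it is returned only when every earlier check has failed.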

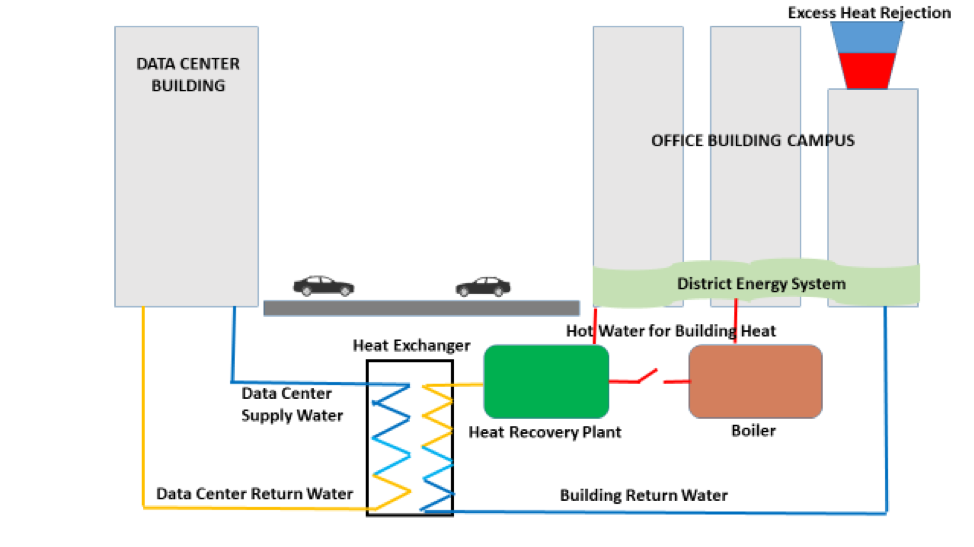

A more current, easier-to-diagram, and still-operating example of productive loop tapping is the Westin-Amazon cooperative project in Seattle. Figure 2 dramatically oversimplifies what they accomplished with this project, but it illustrates the basic building blocks to which all the detailed engineering is applied. In this neatly symbiotic relationship, the Amazon campus is essentially an economizer and condenser for the Westin Carrier Hotel, and the Westin’s thirty-four floors of data center essentially comprise a private utility providing heating energy to the Amazon campus. In this case, Amazon taps the return water loop from the Westin’s data center cooling, and the Westin taps the return water loop from Amazon’s heating.

Figure 2: Simplified Model of Westin-Amazon Utility District

The basic elements of this installation are not particularly innovative and have been employed in other places (data center as well as industrial) around the world over the years. Heat produced by the data center is captured on cooling coils and removed by water that is pumped through a heat exchanger under the street between the two sets of structures. Water passing through the other side of the heat exchanger has its temperature raised, but not high enough to be effective for comfort heating. Heat pumps with a coefficient of performance (COP) of around 4 raise the water to 120˚-130˚F, economically generating a viable heat source for all the buildings of the Amazon campus. Boilers are in place in case the data centers do not produce adequate heat but, according to Jeff Sloan, the McKinstry engineer who orchestrated this project, Amazon typically consumes only a small portion of the data center waste heat, and the boilers have rarely operated since the system became fully operational. Once the heat is removed from the water to provide comfort heating in the office buildings and the associated biosphere dome, the return water from Amazon cools the data center across the street. McKinstry estimates Amazon should save around 80 million kWh of electricity over the first twenty-five years, roughly equivalent to eliminating the carbon emissions from burning sixty-five million pounds of coal. Clise Properties, on the Westin side, saves the water otherwise lost to cooling tower evaporation as well as the operating expense of running the cooling towers. Jeff Sloan estimates about $985,000 in annual savings for five million square feet of office space: not a huge number for a corporation this size, but not bad considering the low Pacific Northwest utility costs.
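The heat pump step follows from a simple energy balance: delivered heat equals the electrical input plus the heat drawn from the source loop, and the heating COP is delivered heat divided by electrical input. The sketch below applies this to the COP of about 4 cited above; the 1,000 kW load is illustrative, and the annual figure simply averages McKinstry's 80 million kWh estimate over twenty-five years.

```python
def heat_pump_split(heat_delivered_kw: float, cop: float) -> tuple[float, float]:
    """Return (electric_input_kw, heat_from_source_kw) for a heat pump.

    Energy balance: delivered = electric + source; heating COP = delivered / electric.
    """
    if cop < 1.0:
        raise ValueError("a heating COP below 1 is not physically meaningful")
    electric_kw = heat_delivered_kw / cop
    return electric_kw, heat_delivered_kw - electric_kw


def average_annual(total: float, years: float) -> float:
    """Simple average of a multi-year total."""
    return total / years


if __name__ == "__main__":
    electric, from_loop = heat_pump_split(1_000.0, cop=4.0)  # illustrative 1 MW of heat
    print(f"compressor electricity: {electric:.0f} kW")
    print(f"heat drawn from data center loop: {from_loop:.0f} kW")
    # McKinstry's estimate: about 80 million kWh over the first 25 years.
    print(f"average savings: {average_annual(80e6, 25):,.0f} kWh/year")
```

At a COP of 4, three quarters of every unit of heat delivered to the Amazon campus comes from the data center loop and one quarter from compressor electricity, and the 25-year estimate averages out to about 3.2 million kWh per year.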

Actual implementation of the Westin-Amazon project belies the simplicity of Figure 2. The most significant complication was that the heat producer and the heat consumer were different entities, separated by a public street in a major urban center. Developing an approved construction plan required close cooperation among Clise Properties, McKinstry, Amazon, the City of Seattle, and Seattle City Light. One distinctive aspect of selling heat to another company is the requirement to be an approved utility company to be in that business, so McKinstry and Clise Properties formed Eco District Limited Liability Company to obtain utility commission approval to enter the energy-selling business. Most states are going to require something similar, with widely varying degrees of bureaucratic difficulty. For example, I have to assume it is a relatively simple process in my home state of Texas, since there appear to be dozens of utility companies all buying and reselling the same ONCOR electricity, with all manner of creative ways to create the appearance of meaningful pricing advantages. Such a project requires passionate persistence and creative tenacity. In this case, Clise Properties president Richard Stevenson and McKinstry’s Jeff Sloan provided the passionate persistence, and the Amazon engineers provided the creative tenacity, overcoming various technical obstacles with solid engineering.

Interestingly, Richard Stevenson described the overall design as a “district energy system.” If you recall the previous piece in this series, local district heating networks in northern Europe were the market condition that enabled data centers to become major utility partners for residential and commercial heating. In fact, the Amazon “district” is larger than many of the community districts spread throughout Scandinavia, which suggests to me that there should be more opportunities around the U.S. for such installations, beyond obvious candidates such as Boston, where some district heating networks still operate. The opportunities could be even greater for global colocation companies: when the weather gets too warm for a data center utility to be “profitable,” the workload could simply be shifted to the other side of the equator, where it could continue generating another revenue stream.
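The follow-the-winter idea for global colocation providers could be sketched as a placement rule that prefers sites currently in their heating season. This is a hypothetical illustration: the fixed month ranges per hemisphere are a crude assumption (a real scheduler would weigh local heating demand and heat contracts alongside latency and other constraints), and the site names and coordinates are made up.

```python
from dataclasses import dataclass

# Crude assumption: heating season by hemisphere, as calendar months (1-12).
NORTH_HEATING = {10, 11, 12, 1, 2, 3, 4}
SOUTH_HEATING = {4, 5, 6, 7, 8, 9, 10}


@dataclass
class Site:
    name: str        # hypothetical site label
    latitude: float  # degrees; positive is north of the equator


def in_heating_season(site: Site, month: int) -> bool:
    season = NORTH_HEATING if site.latitude >= 0 else SOUTH_HEATING
    return month in season


def place_workload(sites: list[Site], month: int) -> list[Site]:
    """Prefer sites whose waste heat has local buyers this month; else keep all."""
    preferred = [s for s in sites if in_heating_season(s, month)]
    return preferred or sites


if __name__ == "__main__":
    fleet = [Site("north-dc", 59.3), Site("south-dc", -33.9)]  # made-up sites
    for month in (1, 7):
        print(month, [s.name for s in place_workload(fleet, month)])
```

Falling back to the full site list when no site is in its heating season keeps the rule from ever stranding a workload; heat sales become a preference, not a hard constraint.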

The idea of tapping the loop is not all that easy to say, and it is even harder to do without a more or less perfect confluence of circumstances and passionate perseverance. A case in point was reported to me by Chris Hill, Director of Research Computing at the Massachusetts Institute of Technology, where they had planned to build a data center with canal water cooling and waste heat re-use. They were unable to overcome all the permitting obstacles to drawing canal water, and the laws of thermodynamics proved a little too overwhelming for the low-grade heat to be put to any profitable use. They nevertheless ended up with a highly efficient facility, which we will cover in a later piece.
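One way to see why thermodynamics works against low-grade heat is the Carnot limit, the maximum fraction of heat any engine can convert to work between a hot source and a cold sink. The sketch assumes a 60˚F sink, which is an illustrative choice, and compares 95˚F data center return air with the 585˚F turbine exhaust from the Syracuse example.

```python
def f_to_kelvin(temp_f: float) -> float:
    return (temp_f - 32.0) * 5.0 / 9.0 + 273.15


def carnot_efficiency(hot_f: float, cold_f: float) -> float:
    """Upper bound on the fraction of heat that can become work between two temperatures."""
    t_hot, t_cold = f_to_kelvin(hot_f), f_to_kelvin(cold_f)
    if t_hot <= t_cold:
        return 0.0
    return 1.0 - t_cold / t_hot


if __name__ == "__main__":
    sink_f = 60.0  # assumed heat-rejection temperature
    for label, hot_f in (("data center return air", 95.0), ("turbine exhaust", 585.0)):
        print(f"{label} at {hot_f:.0f} F: {carnot_efficiency(hot_f, sink_f):.1%} max")
```

Even an ideal engine could turn only about 6% of the 95˚F heat into work, versus roughly half for the 585˚F exhaust, and real machines fall well short of the Carnot limit. Low-grade heat is generally worth more used directly as heat than as a power source.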

Finally, I should not close out this segment without at least a quick reference to my primary area of interest: airflow management. The Westin Carrier Hotel is delivering 65˚F return water to the utility district heat exchanger, which, according to the information I saw, then delivers 60˚F water to the heat recovery plant. In my old data center lab with excellent airflow management, I was getting 65˚F cooling water from my chiller to produce 75˚F supply air. The airflow containment accessories and infrastructure maintained all server inlet temperatures at 78˚F or lower, even with 30kW cabinets mixed into some of the rows. Depending on the type of servers we were testing, our return air varied anywhere from 97˚F up to 110˚F, and our return water temperature off the cooling coils therefore ranged from 78˚F up into the low 80s. With this kind of performance from a consistently applied and maintained airflow management program, the lift required of the heat recovery plant would be reduced by over 28%, resulting in further profits for the data center utility or further savings for the utility’s customer. I would hope that lessons learned from the Westin-Seattle-Amazon collaboration, along with moving the needle through improved airflow management, might inspire another round of loop-tapped data center utilities.
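The lift argument can be checked with a short calculation. It assumes the heat pumps deliver water at 125˚F (the midpoint of the 120˚-130˚F range cited for the Amazon campus), takes the 60˚F water currently reaching the heat recovery plant as the baseline, and treats lift as a simple temperature difference; the improved source temperatures are taken from the 78˚F-to-low-80s return water range in my lab, not measured values at the Westin.

```python
def lift_f(source_f: float, delivery_f: float) -> float:
    """Temperature lift (F) the heat pumps must provide."""
    return delivery_f - source_f


def lift_reduction(baseline_source_f: float, improved_source_f: float,
                   delivery_f: float = 125.0) -> float:
    """Fractional drop in lift when the source water arrives warmer than the baseline."""
    baseline = lift_f(baseline_source_f, delivery_f)
    if baseline <= 0.0:
        raise ValueError("baseline source is already at or above the delivery temperature")
    return 1.0 - lift_f(improved_source_f, delivery_f) / baseline


if __name__ == "__main__":
    for improved_f in (78.0, 80.0, 82.0):
        print(f"{improved_f:.0f} F source: lift cut by {lift_reduction(60.0, improved_f):.0%}")
```

Under these assumptions, 78˚F source water cuts the lift by about 28% and 80˚F by about 31%. Because a heat pump's COP generally improves as its lift shrinks, a smaller lift should also mean less compressor energy per unit of heat delivered.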


Ian Seaton

Data Center Consultant
