How Computer Chips Are Being Upgraded to Serve AI Workloads in Data Centers

Chip development is moving fast. Nvidia, AMD, Intel and others are working on designs that support greater AI capabilities. The goal is to pack more processing power into smaller spaces.

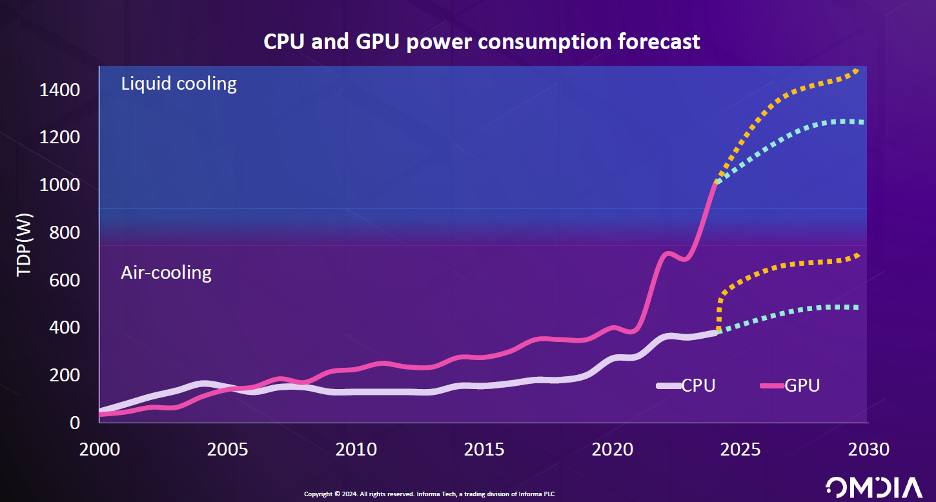

Thermal design power (TDP), measured in watts, is the amount of heat a processor's cooling system is designed to remove under sustained load. According to Omdia projections, the TDP explosion has begun: CPUs and GPUs rated at 200 to 300 W a year or two ago are heading to 1,000 W and beyond within a few years.

“TDP has been spiking since 2020, so we need to rethink the cooling roadmap by incorporating liquid,” said Mohammad Tradat, Ph.D., Manager of Data Center Mechanical Engineering at Nvidia.

His company has developed a new GPU that can drive power consumption in a single rack to 138 kW. The Nvidia H100 Tensor Core GPU is part of the company’s DGX SuperPOD platform, which is designed to maximize AI throughput.

Chip power needs are growing almost exponentially. Courtesy of Omdia.
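The 138 kW rack figure is easier to appreciate as a cooling problem. A coolant removes heat at a rate Q = ṁ·c_p·ΔT, so the flow it needs depends on its heat capacity and density. The minimal sketch below compares air and water for one 138 kW rack. The property values are approximate textbook numbers, and the 10 K temperature rise is a hypothetical design choice, not something the article specifies.

```python
# Sketch: coolant volume flow needed to remove a heat load, from Q = m_dot * c_p * dT.
# Assumptions (not from the article): approximate room-temperature property values
# and a hypothetical 10 K temperature rise across the rack for both coolants.

AIR_CP = 1005.0        # J/(kg*K), specific heat of air (approximate)
AIR_DENSITY = 1.2      # kg/m^3, air near 20 C at sea level (approximate)
WATER_CP = 4186.0      # J/(kg*K), specific heat of liquid water (approximate)
WATER_DENSITY = 998.0  # kg/m^3, water near 20 C (approximate)


def volumetric_flow_m3_per_s(heat_w: float, cp: float, density: float, delta_t_k: float) -> float:
    """Volume flow at which the coolant absorbs heat_w watts while warming by delta_t_k kelvin."""
    mass_flow_kg_per_s = heat_w / (cp * delta_t_k)
    return mass_flow_kg_per_s / density


if __name__ == "__main__":
    rack_heat_w = 138_000.0  # W, the single-rack figure cited for Nvidia's platform
    delta_t = 10.0           # K, hypothetical coolant temperature rise
    air = volumetric_flow_m3_per_s(rack_heat_w, AIR_CP, AIR_DENSITY, delta_t)
    water = volumetric_flow_m3_per_s(rack_heat_w, WATER_CP, WATER_DENSITY, delta_t)
    print(f"air:   {air:.1f} m^3/s")
    print(f"water: {water * 1000:.1f} L/s")
    print(f"ratio: air needs ~{air / water:,.0f}x the volume flow of water")
```

The ratio depends only on each fluid's volumetric heat capacity (c_p times density), so it holds at any heat load; the absolute flows scale linearly with the load and inversely with the allowed temperature rise.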

Intel, too, is innovating to meet the needs of AI. Its new Gaudi 3 chip is a potential rival to Nvidia’s H100. Dev Kulkarni, Ph.D., Senior Principal Engineer and Thermal Architect at Intel, claims it is 40% more power efficient and 1.7x faster at training the large language models (LLMs) that sit at the heart of generative AI applications such as ChatGPT.

“We can pack 1000 watts or more in a small area which makes cooling challenging,” said Kulkarni.
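Kulkarni’s point about area is really about heat flux: the same wattage becomes harder to remove as the surface it leaves through shrinks. The sketch below computes average heat flux for a few power levels. The 800 mm² and 1,600 mm² areas are hypothetical round numbers, not specifications of Gaudi 3, H100 or any other product.

```python
# Sketch: average heat flux through a chip package, in W/cm^2.
# The areas below are hypothetical round numbers chosen for illustration;
# they are not specifications of Gaudi 3, H100, or any other product.


def heat_flux_w_per_cm2(power_w: float, area_mm2: float) -> float:
    """Average heat flux when power_w watts leave through area_mm2 square millimetres."""
    area_cm2 = area_mm2 / 100.0  # 100 mm^2 = 1 cm^2
    return power_w / area_cm2


if __name__ == "__main__":
    for power in (300.0, 1000.0):      # W, sample points from the TDP range discussed in the article
        for area in (800.0, 1600.0):   # mm^2, hypothetical heat-spreading areas
            flux = heat_flux_w_per_cm2(power, area)
            print(f"{power:6.0f} W over {area:6.0f} mm^2 -> {flux:6.1f} W/cm^2")
```

Halving the area doubles the flux at the same power, which is why dense packaging pushes designers toward cold plates and other liquid-based approaches.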

Vlad Galabov, Head of Omdia’s data center practice, said CPU die size has grown 100-fold since the 1970s, and that today’s processors are 7.6x larger and require 4.6x more power than the state of the art in 2000. He expects the trend to accelerate, predicting that processors will reach 288 cores by the end of 2024, 10 times more than in 2017. Software optimization will also lead to customized processors tailored to AI applications, enabling another wave of server consolidation.
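Galabov’s core-count forecast implies an annual growth rate that the multiples alone don’t show. Assuming the 2017-to-2024 span is exactly seven years, a compound annual growth rate (CAGR) calculation gives the average yearly increase behind a 10x jump. This is a derived illustration, not an Omdia figure.

```python
# Sketch: compound annual growth rate (CAGR) implied by a growth multiple over a period.
# Inputs come from the Omdia figures quoted in the article (10x more cores, 288 by end of 2024);
# treating 2017 -> 2024 as exactly 7 years is a simplifying assumption.


def cagr(multiple: float, years: float) -> float:
    """Annual growth rate r such that (1 + r) ** years == multiple."""
    return multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    cores_2024 = 288
    multiple = 10.0
    years = 2024 - 2017
    rate = cagr(multiple, years)
    print(f"Implied 2017 core count: ~{cores_2024 / multiple:.0f}")
    print(f"Implied core-count growth: {rate:.1%} per year over {years} years")
```

With these inputs the implied growth is roughly 39% a year, from a 2017 baseline of about 29 cores.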

Despite its already immense impact on data center power consumption, generative AI is only at the early-adopter stage. Alongside research into bigger, better, faster and more core-dense chips, work is under way to make AI efficient enough to run on CPUs rather than GPUs. ThirdAI, for example, pre-trained its Bolt LLM using only 10 servers, each with two Intel Sapphire Rapids CPUs, a far leaner setup than the 128 GPUs needed for GPT-2. The company claims Bolt is 160 times more efficient than traditional LLMs.

Cooling and Efficiency Push

If data centers are to stay efficient and keep highly dense AI systems cool, there is a need for:

- Innovation in liquid cooling and data center efficiency

- Emphasis on the basics of data center cooling and airflow management, such as containment for hot/cold aisle separation

To spur innovation, the Advanced Research Projects Agency-Energy (ARPA-E) has launched the COOLERCHIPS program. According to Peter de Bock, Program Director for ARPA-E, it funds research into transformational, highly efficient and reliable cooling technologies for high-density compute systems. The goal is to reduce total cooling energy to less than 5% of a typical data center’s IT load, at any time and at any U.S. location.
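The COOLERCHIPS target can also be read in terms of Power Usage Effectiveness (PUE), defined as total facility energy divided by IT equipment energy. If cooling were the only non-IT load, keeping it under 5% of IT load would hold PUE below 1.05. The sketch below checks hypothetical cooling scenarios against that threshold. All energy figures are invented for illustration, and lumping every other overhead into one `other_overhead_kwh` term is a simplification.

```python
# Sketch: checking cooling energy against the COOLERCHIPS target (below 5% of IT load)
# and showing its effect on PUE = total facility energy / IT equipment energy.
# All energy values are hypothetical; "other overhead" (power conversion, lighting, ...)
# is lumped into a single optional term for simplicity.

COOLERCHIPS_TARGET = 0.05  # cooling energy must stay below 5% of IT load


def cooling_fraction(cooling_kwh: float, it_kwh: float) -> float:
    """Cooling energy as a fraction of IT energy over the same period."""
    return cooling_kwh / it_kwh


def pue(it_kwh: float, cooling_kwh: float, other_overhead_kwh: float = 0.0) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT energy."""
    return (it_kwh + cooling_kwh + other_overhead_kwh) / it_kwh


if __name__ == "__main__":
    it = 1000.0  # kWh of IT load over some period (hypothetical)
    for cooling in (400.0, 150.0, 40.0):  # hypothetical cooling-energy scenarios, kWh
        frac = cooling_fraction(cooling, it)
        meets = frac < COOLERCHIPS_TARGET
        print(f"cooling {cooling:5.0f} kWh -> {frac:.0%} of IT load, "
              f"meets target: {meets}, PUE (cooling only): {pue(it, cooling):.2f}")
```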

Progress has been made so far on a variety of approaches that can cool data center densities of 80 kW/m³ or more. These include 3D flow manipulation in cold plates for more efficient heat transfer, better cold-plate materials, advanced immersion cooling with more efficient fluids, and work to find the optimum operating temperature for data centers.

“Reimagined data center architectures may enable us to not have humans in the same room as computers, so we can run hotter data centers and lower energy demands,” said de Bock.

Liquid cooling will certainly play a big part, and this vibrant sector should see plenty of advances in the coming months and years. But liquid will only take us so far. Keeping Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy, low also requires a heavy emphasis on efficiency across every aspect of the data center. In particular, the basics of air cooling must be applied meticulously: directing cool air precisely to hot spots and managing airflow so that hot and cold air do not mix. Exhaust air from the hot aisles must not escape back into the cold aisles, which means liberal use of blanking panels, containment systems, cable grommets that block the passage of air, and every other basic measure.
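The cost of letting hot and cold air mix can be estimated with a simple energy balance. Assuming ideal mixing and equal specific heats, if a fraction f of the air entering the servers is recirculated exhaust, the inlet temperature is a weighted average of the supply and exhaust temperatures. The temperatures and fractions in this sketch are hypothetical.

```python
# Sketch: why hot/cold aisle separation matters. If a fraction f (by mass) of the air
# reaching server inlets is recirculated hot-aisle exhaust, an energy balance with equal
# specific heats gives a mixed inlet temperature of (1 - f) * T_supply + f * T_exhaust.
# All temperatures and fractions below are hypothetical.


def mixed_inlet_temp_c(supply_c: float, exhaust_c: float, recirc_fraction: float) -> float:
    """Inlet temperature when recirc_fraction (0..1) of the intake air is exhaust air."""
    if not 0.0 <= recirc_fraction <= 1.0:
        raise ValueError("recirc_fraction must be between 0 and 1")
    return (1.0 - recirc_fraction) * supply_c + recirc_fraction * exhaust_c


if __name__ == "__main__":
    supply, exhaust = 22.0, 40.0  # deg C, hypothetical cold-aisle supply and hot-aisle exhaust
    for f in (0.0, 0.1, 0.25):
        t = mixed_inlet_temp_c(supply, exhaust, f)
        print(f"recirculation {f:.0%}: inlet {t:.1f} C (+{t - supply:.1f} C)")
```

In this model, recirculating a quarter of the intake raises the inlet temperature by a quarter of the supply-to-exhaust difference. The cooling plant must then supply colder air to compensate, which costs energy; blanking panels and containment work by keeping f near zero.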

Simple efficiency measures matter more than ever, because AI has raised the stakes. Processing hardware and liquid cooling systems will require such heavy investment that costs must be contained across the board. The basics of air cooling, with correct channeling and containment of hot and cold air, must be implemented side by side with liquid cooling. Otherwise, efficiency will stay low and hot spots will multiply.

“Data center providers need to facilitate density ranges beyond the normal 10–20 kW/rack to 70 kW/rack and 200–300 kW/rack,” said Courtney Munroe, an analyst at International Data Corp. “This will necessitate innovative cooling and heat dissipation.”

Drew Robb

Writing and Editing Consultant and Contractor