How HPC is Heading Down the Data Center Food Chain

High performance computing (HPC) used to be reserved for supercomputing centers, high-end analytics firms, and specialized oil and gas exploration applications. But the technology is being democratized. Recent advances in processing, cooling, and data center density have driven broader HPC adoption than we’ve ever seen before.

“Historically, HPC has been the domain of national laboratories and high-end industries,” said Rob Bunger, Program Director, CTO office, Data Center segment at Schneider Electric. “Now, more companies and universities are deploying IT that would have been classified as HPC.”

As a result, many more data centers now find themselves with HPC racks. For some, it is just one solitary rack. For others it is many. Let’s take a look at this trend, and what data centers are doing to accommodate it.

Hot HPC Market

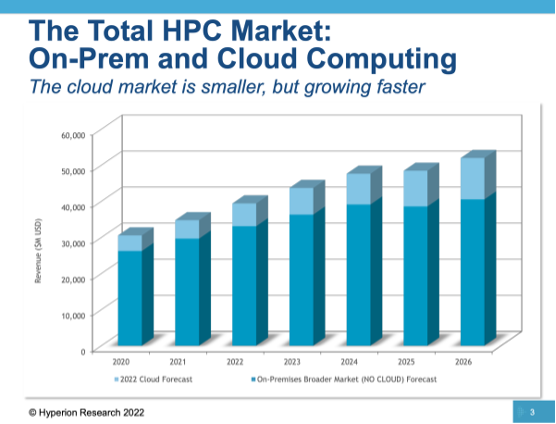

Total worldwide HPC revenues (on-premises and cloud) for 2022 were about $38.5 billion, according to Hyperion Research. That’s a rise of about 10% over 2021, and 2023 should hit about $43 billion. On-premises HPC applications continue to dominate. But cloud-based HPC is gradually gaining ground. By 2026, cloud HPC will be worth around $13 billion annually. This has been a major factor in the gradual democratization of HPC.
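As a rough sanity check on these figures, the growth arithmetic can be sketched in a few lines of Python. The inputs are the rounded numbers quoted above; the helper names are illustrative only, and the results are back-of-the-envelope estimates rather than Hyperion data.

```python
# Figures quoted in the article (billions of USD, rounded by the source).
REVENUE_2022 = 38.5
GROWTH_2021_TO_2022 = 0.10  # "about 10%"
FORECAST_2023 = 43.0        # "about $43 billion"


def implied_prior_year(current: float, growth: float) -> float:
    """Back out the previous year's value from a year-over-year growth rate."""
    return current / (1.0 + growth)


def growth_rate(previous: float, current: float) -> float:
    """Year-over-year growth as a fraction (0.10 means 10%)."""
    return current / previous - 1.0


revenue_2021 = implied_prior_year(REVENUE_2022, GROWTH_2021_TO_2022)
growth_2023 = growth_rate(REVENUE_2022, FORECAST_2023)

print(f"Implied 2021 revenue: ${revenue_2021:.1f}B")
print(f"Implied 2022-to-2023 growth: {growth_2023:.1%}")
```

Under these rounded inputs, 2021 revenue works out to roughly $35 billion, and the 2023 forecast implies growth slightly above the prior year's pace.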

This doesn’t mean supercomputers are going away as a primary HPC user. They still amount to about 50% of the overall market. But enterprises are more willing to trust others with HPC infrastructure and services via cloud deployments of the technology. And with many cloud providers now offering high-powered compute, AI, and analytics services, data centers are picking up additional business in supporting these use cases.

“Using clouds to run HPC workloads is growing between 17-to-19% a year,” said Hyperion CEO Earl Joseph.

Looking more deeply into the market, servers packed with high-powered processors such as GPUs account for half of all HPC spending. Storage accounts for another 20%. That isn’t going to change anytime soon. In fact, most users surveyed by Hyperion expect storage budgets to rise by another 5% or more over the course of 2023. The dominant vendors in HPC servers are the usual suspects: HPE, Dell, and Lenovo. But others serve more specific needs such as high-end supercomputing, exascale applications, or smaller, workload-specific systems. These include the likes of Inspur, Atos, Sugon, IBM, Penguin, Fujitsu, and NEC.

“Exascale systems used in the supercomputer market are expected to maintain a growth rate of more than 16% for the next few years,” said Joseph.

HPC Drivers

Bunger of Schneider Electric listed the main drivers for HPC and for higher power densities per rack: IT workloads related to AI, machine learning, training, and video processing, which are increasing the prevalence of graphics processors, and the steadily dropping price point of high-end CPUs and other accelerator chips.

He emphasized that advances in both air and liquid cooling are playing a big part in the growth of HPC and in making it accessible to a larger pool of users. Air cooling, he said, can comfortably support 20 kW per rack, which is considered a reasonable upper limit, although some data centers have pushed the bounds of air cooling well past that point.

“Higher densities are being deployed via air cooling, but the effort becomes much greater,” said Bunger.

Beyond that, liquid cooling is required. Bunger has seen plenty of 50 kW HPC racks deployed with liquid cooling, and racks in the range of 100 kW and above are growing in number.
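The rule of thumb Bunger describes can be sketched as a simple lookup. This is a simplification for illustration only: the 20 kW figure comes from the article, while the function name and the hard cutoff are assumptions, and real designs depend on the facility (some sites do run air cooling above this point, at greater effort).

```python
# Comfortable upper limit for air cooling per rack, as quoted in the article.
AIR_COMFORT_LIMIT_KW = 20.0


def cooling_guidance(rack_kw: float) -> str:
    """Return a coarse cooling suggestion for a given rack power density.

    Hypothetical helper: a hard threshold is an illustrative assumption,
    not an engineering rule.
    """
    if rack_kw < 0:
        raise ValueError("rack power must be non-negative")
    if rack_kw <= AIR_COMFORT_LIMIT_KW:
        return "air"
    return "liquid"


# Densities mentioned in the article: 20 kW, 50 kW, and 100 kW per rack.
for kw in (20, 50, 100):
    print(f"{kw} kW per rack -> {cooling_guidance(kw)} cooling")
```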

“Higher chip densities are the main driver for liquid cooling adoption, both immersion and direct to chips (d2c),” said Bunger.

He believes d2c and other forms of direct liquid cooling (DLC) have helped to take HPC to a new level of adoption. In addition, immersion cooling advances hold the potential to open the flood gates to an even wider swath of users.

“Many start-up companies are incubating different liquid cooling technologies,” said Bunger. “And highly efficient facility cooling designs are emerging with more free cooling that take advantage of the higher water temperatures of liquid cooling.”

And Then There is the Metaverse

Looking ahead, Bunger noted additional factors that are only going to drive the HPC space to further heights. The metaverse is one obvious example. Who knows what kind of revolutions in IT and HPC usage it will eventually bring?

But traditionally conservative industrial users are also beginning to realize the potential value HPC can bring to them. Digital twins serve as a case in point. Many in the energy sector, for example, are deploying vast numbers of sensors and Industrial Internet of Things (IIoT) devices in their power plants, oil and gas facilities, and in heavy equipment such as gas turbines, steam turbines, and compressors. This enables them to create virtual copies of real-world equipment and facilities. The benefit: they increase the efficiency of maintenance activities, eliminate unnecessary outages, and do away with fixed schedules for the replacement of components. Instead, they can use digital systems to track wear and tear and thus get longer life out of parts than before. They can also model changes using the digital twin to see how they might impact the facility or machine.

Use cases such as the digital twin and the metaverse require a lot of compute power. HPC fits the bill nicely.

Drew Robb

Writing and Editing Consultant and Contractor
