What Happened to Promised Airflow Management Efficiency Savings? – Part 1

Long-time reader Hondo Q. from data center hot bed Lihue, Kauai writes in, “What’s going on? I have plugged all the holes in my data center; I have installed blanking panels in every unused rack mount space; I have put in floor grommets in all the tile cut-out openings. I have added doors to the ends of my hot aisles. I have connected the tops of my ICT equipment cabinets to the drop ceiling and I have added plenum extensions to my cooling units. I have sealed up all the openings between my servers and the cabinet side panels. I have sealed up between the cabinets and the floor and between all the cabinets. But I have not seen any consequential drop in my electric bill. What have I missed?”

First of all, I’d like to extend a note of appreciation to Hondo for his polite discourse. Under similar circumstances, it would not be surprising to read, “Professor Seaton, I spent all this money and did not get anything for it. You are full of crap!” Second of all, I did not find a letter from Hondo in my virtual mailbox. In fact, I don’t even know if there is a Hondo in Lihue or, with all due respect to my parents’ hometown, if there is even a data center there. Nevertheless, my hypothetical Hondo raises a concern I continue to hear, albeit not from my regular readers. Therefore, this is the blog that you forward to any of your data center friends who may not be a part of any of these distribution outlet communities. After all, any committed reader who has powered through the series of algorithm blogs over the previous four-plus months will know that airflow management disciplines enable:

- Changes to cooling unit fan speeds

- Changes to cooling unit set points

- Changes to mechanical plant operation parameters

- Changes to free cooling access

- And, most importantly, the data center manager to make those changes

Airflow management is a powerful data center energy-saving tool. In fact, in the ASHRAE flowcharts for optimizing set points for maximum energy efficiency, you cannot get out of the starting blocks until you can answer “yes” to the questions about the quality of your airflow management practices. So Hondo, based on your note, you have checked off all the boxes for airflow management except for correcting the airflow patterns of non-compliant switches. I am going to assume that was not an oversight and that you probably just did not have any of the typical pieces of network equipment in service with non-compliant airflow patterns.

That being the case, your airflow management has opened the door to significant data center energy savings. Nevertheless, you still need to walk through the door to take advantage of your good sense. Your blanking panels and floor grommets don’t have thumbs to manipulate thermostats and other control settings; that is what they pay you for, Hondo. So the first thing you are going to want to do is to switch your set point from a return air set point to a supply air set point. I do not know where you were operating prior to implementing your airflow management initiatives, but I suspect you had a set point somewhere in the range of 68˚F to maybe 73˚F. If your goal is to keep all your ICT equipment from experiencing inlet air temperature above the ASHRAE TC9.9 recommended ceiling of 80.6˚F, you can start by making your supply air set point 75˚F. (You can fine-tune that later.) While your cooling equipment is settling into its new operating realm, let’s look at where you have been and where you arrived when you determined you were not getting the expected savings from your airflow management investment.

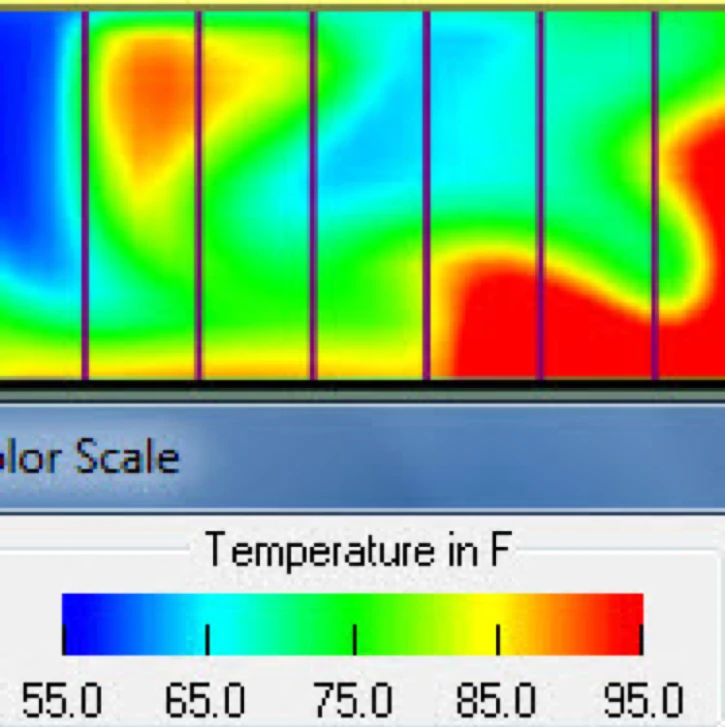

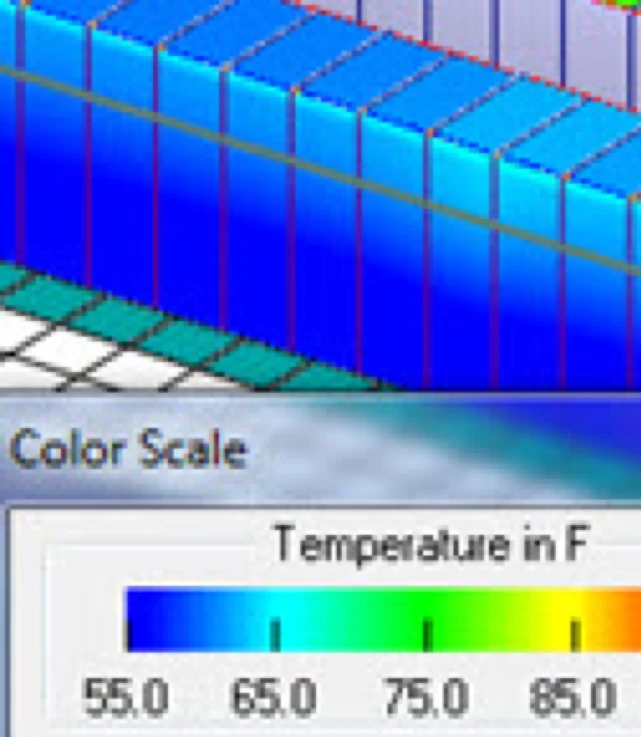

Figure 1: Server Cabinet Faces with Hot Spots

If prior to executing your airflow management project the temperature exposure of your servers looked something like the cold aisle cabinet face profile in Figure 1, then your first priority should have been to eliminate the hot spots, which you have now accomplished. After making your airflow management improvements to this data center and prior to making your set point change, those server inlet temperatures would look more like Figure 4. While your immediate response might be to start high-fiving yourself for eliminating the hot spots, I suggest you first check the temperature reading scale to note that you are almost 10˚F below the ASHRAE TC9.9 recommended minimum and that on Kauai you may be creeping into a dew point danger zone.
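Checking the temperature scale can be reduced to a few lines of arithmetic. The sketch below is only an illustration: the 80.6˚F ceiling is the one cited above, the 64.4˚F floor is the matching ASHRAE TC9.9 recommended minimum (18˚C), and the function name and sample readings are hypothetical stand-ins for whatever your own sensors report.

```python
# ASHRAE TC9.9 recommended envelope for server inlet air, in degrees F.
ASHRAE_RECOMMENDED_MIN_F = 64.4  # 18 C
ASHRAE_RECOMMENDED_MAX_F = 80.6  # 27 C


def classify_inlets(readings_f):
    """Count inlet readings below, within, and above the recommended range."""
    summary = {"below": 0, "within": 0, "above": 0}
    for temp_f in readings_f:
        if temp_f < ASHRAE_RECOMMENDED_MIN_F:
            summary["below"] += 1
        elif temp_f > ASHRAE_RECOMMENDED_MAX_F:
            summary["above"] += 1
        else:
            summary["within"] += 1
    return summary


# Hypothetical readings: three overcooled inlets, one in range, one hot spot.
sample_readings_f = [55.0, 56.5, 58.0, 66.0, 82.0]
print(classify_inlets(sample_readings_f))
```

A cold aisle where most readings land in the "below" bucket has no hot spots, but it is also a cold aisle where cooling energy is being spent for no benefit.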

Figure 2: Server Cabinet Faces with Wide Temperature Variation

If, on the other hand, Hondo, your starting point looked more like Figure 2 where we have close to a 30˚F variation in server inlet temperatures but no catastrophic hot spots, then your “after” condition will still look like Figure 4. And, you will still be operating below the ASHRAE recommended minimum and you may still be flirting with dew point (i.e., humidity/condensation) issues, but you will also be over-supplying chilled air into the data center and thereby creating some bypass. Not only have you reduced the temperature of your cold aisles, but you have reduced the temperature in your hot aisles as well. Granted, that makes the hot aisles a little more comfortable for your boss on his annual February audit pilgrimage from Duluth, but more importantly you are actually reducing efficiency rather than capturing gains: the bypass airflow reduces the temperature differential across your cooling equipment coils and if you are water cooling with a chiller you have reduced the chiller efficiency with a lower approach. Don’t worry; we can fix this.
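To see why bypass shrinks the temperature differential across the coils, a simple mixing model is enough. This is a rough sketch, assuming the return air is a flow-weighted blend of server exhaust and untouched supply air with equal density and specific heat; the function names and the example temperatures are hypothetical, not measurements from any particular room.

```python
def return_air_temp_f(supply_f, server_exhaust_f, bypass_fraction):
    """Return air temperature when part of the supply air bypasses the servers.

    bypass_fraction is the share of supply airflow (0.0 to 1.0) that returns
    to the cooling unit without picking up any server heat.
    """
    if not 0.0 <= bypass_fraction <= 1.0:
        raise ValueError("bypass_fraction must be between 0.0 and 1.0")
    return bypass_fraction * supply_f + (1.0 - bypass_fraction) * server_exhaust_f


def coil_delta_t_f(supply_f, server_exhaust_f, bypass_fraction):
    """Temperature differential the cooling coil sees (return minus supply)."""
    return return_air_temp_f(supply_f, server_exhaust_f, bypass_fraction) - supply_f


# Hypothetical case: 60 F supply, 85 F server exhaust.
for bypass in (0.0, 0.2, 0.4):
    print(bypass, round(coil_delta_t_f(60.0, 85.0, bypass), 1))
```

In this model the coil differential falls in direct proportion to the bypass share, which is the efficiency penalty described above.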

Figure 3: Server Cabinet Faces with Small Variation of Cool Temperature

Finally, Hondo, if your baseline was more like Figure 3 where you would have been mostly operating right at the bottom level of the ASHRAE TC9.9 recommended range for server inlet temperatures, then your airflow management improvements would have resulted, again, in cold aisles that looked like Figure 4. However, with a Figure 3 baseline, you can be assured that you are now blasting high volumes of bypass airflow into your cold aisles. This is where you say, “Wait a minute, professor, I have just put in all this airflow management to keep my hot aisles and cold aisles isolated from each other. I’m thinking this stuff works in both directions.” You would be right, except for one small weak point in the system that only works from the cold aisle to the hot aisle: the servers themselves. Dead or out-of-service servers are obviously the worst offenders, but working servers also allow a significant amount of bypass airflow, particularly in highly pressurized aisles. Hondo, if your baseline looked like Figure 3, then we can be assured large volumes of supply air were being pushed through your servers in excess of the demand of their fans and associated heat loads. Chances are your hot aisles were hot in name only.
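For a rough feel of how much supply air exceeds server demand, the common sensible-heat rule of thumb can be put to work. The sketch below assumes standard sea-level air (heat in BTU/hr is about 1.08 x CFM x temperature rise in ˚F) and 3.412 BTU/hr per watt; the function names, the 100 kW load, the 25˚F server temperature rise, and the 20,000 CFM supply figure are all hypothetical.

```python
# Assumptions: standard sea-level air and the usual HVAC rule of thumb.
BTU_PER_HR_PER_WATT = 3.412
SENSIBLE_HEAT_FACTOR = 1.08  # BTU/hr per CFM per degree F


def it_airflow_demand_cfm(it_load_watts, server_delta_t_f):
    """Airflow the IT load needs to carry away its heat at a given rise."""
    if server_delta_t_f <= 0:
        raise ValueError("server_delta_t_f must be positive")
    heat_btu_per_hr = it_load_watts * BTU_PER_HR_PER_WATT
    return heat_btu_per_hr / (SENSIBLE_HEAT_FACTOR * server_delta_t_f)


def oversupply_fraction(supply_cfm, demand_cfm):
    """Share of supply airflow beyond what the IT load demands (never negative)."""
    if supply_cfm <= 0:
        raise ValueError("supply_cfm must be positive")
    return max(0.0, (supply_cfm - demand_cfm) / supply_cfm)


# Hypothetical room: 100 kW of IT load, 25 F rise across the servers,
# cooling units delivering 20,000 CFM.
demand = it_airflow_demand_cfm(100_000, 25.0)
print(round(demand), round(oversupply_fraction(20_000, demand), 2))
```

Any result well above zero suggests fan speeds have room to come down once the supply set point is under control.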

Figure 4: Server Cabinet Faces in a Cold Cold Cold Aisle

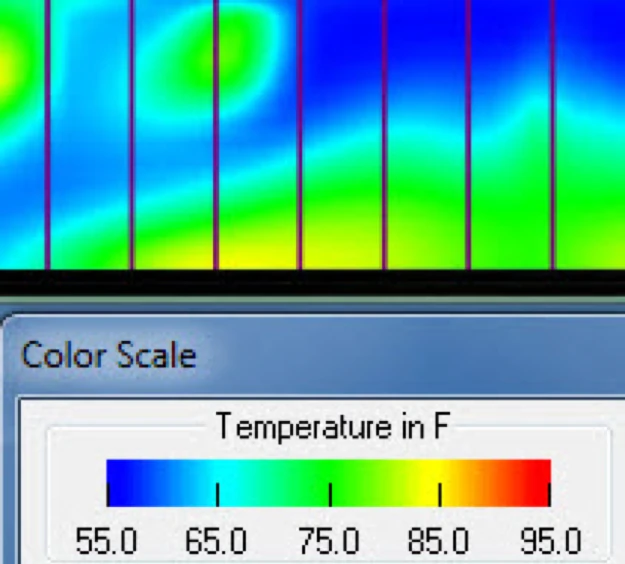

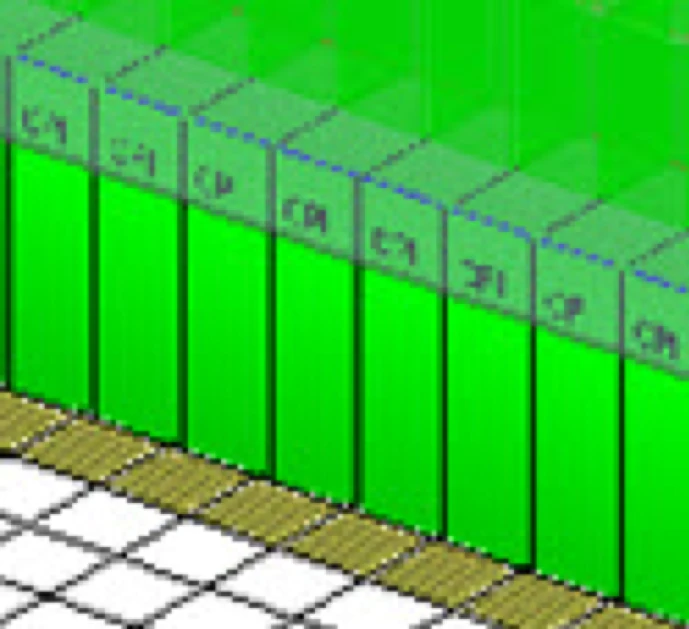

Regardless of what your baseline situation was prior to implementing airflow management best practices, you will have ended up with something resembling Figure 4. With the first intentional activity I have recommended to you, switching from a return set point to a supply set point and adjusting the supply to what you would like to see in the data center, your cold aisles should now look a lot closer to Figure 5.

Figure 5: Server Cabinet Faces after Change to Supply Air Set Point

Tune in next week for the second half of this series that will include tips and best practices for achieving airflow management efficiency savings.

Ian Seaton

Data Center Consultant
