Why PUE Remains Flat and What Should Be Done About It

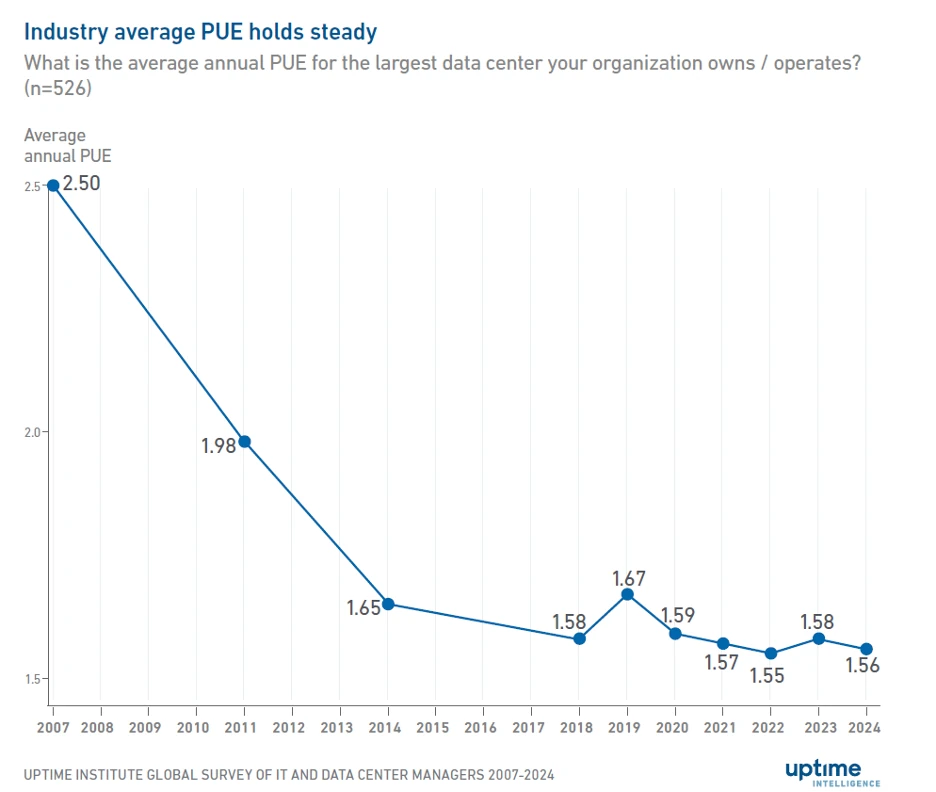

One of the most successful programs in data center efficiency has to be Power Usage Effectiveness (PUE), which was launched about 20 years ago. The idea is to have PUE as close to 1 as possible. A 1.0 rating means all of the power coming into the data center is used in running the IT equipment. Between 2007 and 2014, massive gains were made in average PUE across all data centers surveyed by the Uptime Institute. Since then, progress has been slow overall.
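To make the metric concrete: PUE is the ratio of total facility power to the power delivered to IT equipment. The short sketch below computes it from two meter readings. The function name and the sample readings are illustrative assumptions, not figures from any survey.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Return PUE: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_equipment_kw


# Hypothetical readings: 1,560 kW at the utility meter, 1,000 kW at the IT load.
total_kw = 1560.0
it_kw = 1000.0

print(f"PUE = {pue(total_kw, it_kw):.2f}")
# Everything that is not IT load (cooling, power distribution losses, lighting)
print(f"Overhead = {total_kw - it_kw:.0f} kW")
```

In this made-up case, every 1,000 kW of IT load carries 560 kW of overhead. A facility at exactly 1.0 would carry none.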

According to the Uptime Institute’s Global Data Center Survey 2024, average PUE levels remain mostly flat for the fifth consecutive year at 1.56. Part of the reason for this is that the initial gains in PUE were achieved by data centers addressing low-hanging fruit and more affordable measures, like blanking panels, containment systems, and variable frequency drives.

Standing in the way of further improvement are a couple of key factors. Let’s look at each.

Legacy Designs

Half of the data centers in the PUE survey are more than 11 years old. Older data centers tend to require more extensive and expensive upgrades to bring PUE down to acceptable levels. I once visited a data center in a basement in Denmark. The ceiling consisted of a series of concrete ribs, each about three feet deep, running from one end of the room to the other. Unfortunately, the data center aisles ran perpendicular to these ribs. The result: the ceiling impeded the flow of both hot and cold air. Such a facility would require a lot of work to improve PUE.

In stark contrast, newer facilities and the latest designs typically squeeze out every bit of efficiency they can. Facebook’s Prineville data center in Oregon, for example, boasts a PUE of around 1.15. Prineville is an example of the modern trend toward much larger facilities. The average data center size nowadays is around 137,000 square feet. However, hyperscaler and large colocation facilities are often much larger.

What appears to be the case is that a few massive data centers record excellent PUE numbers while a great many smaller, older, and less efficient data centers push the overall average PUE up. This is changing as more state-of-the-art facilities open and many more are under construction. These buildings demonstrate substantial efficiency gains. Hence, Uptime Institute expects them to materially influence average PUE over the next five years. The report said: “Many recent builds consistently achieve a PUE of 1.3 — and sometimes much better. With new data center construction activity at an all-time high to meet capacity demand, Uptime Intelligence expects these more efficient facilities to lower the average PUE in the coming years as their proportion in the survey sample grows.”
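The effect of a changing sample mix can be shown with simple arithmetic. The sketch below assumes the survey average is a plain mean across responding facilities. It uses a hypothetical PUE of 1.7 for legacy sites and the 1.3 quoted for recent builds. The site counts are invented for illustration.

```python
def sample_average_pue(n_legacy: int, legacy_pue: float,
                       n_new: int, new_pue: float) -> float:
    """Plain mean PUE over a sample of legacy and new facilities."""
    total_sites = n_legacy + n_new
    if total_sites == 0:
        raise ValueError("sample must contain at least one facility")
    return (n_legacy * legacy_pue + n_new * new_pue) / total_sites


LEGACY_PUE = 1.7  # hypothetical value for older facilities
NEW_PUE = 1.3     # figure quoted for many recent builds

# Hypothetical samples of 100 sites with a growing share of new builds.
for n_new in (10, 25, 40):
    avg = sample_average_pue(100 - n_new, LEGACY_PUE, n_new, NEW_PUE)
    print(f"{n_new}% new builds -> average PUE {avg:.2f}")
```

Under these assumptions the average falls only as the share of new builds rises, which is consistent with a flat headline number while older sites still dominate the sample.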

Reinforce the Basics

The other factor that can play a key role in lowering PUE is a reinforcement of the basics. The initial gains in PUE were made largely by instituting measures to separate cold and hot air, implement containment solutions to keep cold air close to the front of cabinets, and other simple and inexpensive measures.

However, inspections by Upsite Technologies typically find that the basics of airflow management are not fully applied:

Floor Tiles: Time and again, tiles in raised floor designs are found in the wrong place or with cracks, openings, and places where air can escape when it is not supposed to. Perforated tiles are sometimes found in the hot aisle. This means that cold air is being fed directly into the hot aisle and is effectively wasted. Missing tiles are common, too. Data center staff should inspect the state of their tiles, fix or replace those that are cracked or broken and ensure the correct tiles are in the correct aisle. Further, sealing tape should be used to seal openings.

Cable Openings: Many formerly efficient data centers have had their PUE wrecked by poor cable management. In the rush to install equipment, a hole is punched through a tile or rack panel. This provides a channel for air to flow to where it isn’t supposed to. Data center personnel are advised to inspect every aisle and every rack to look for such channels as every single point of mixing air lowers efficiency and increases energy consumption. Each point of cable penetration should be repaired by use of grommets that allow cable penetration while preventing air from escaping.

Open Spaces in Racks: Check the front of racks for missing servers and open spaces. Fill these with blanking panels to prevent waste of air-conditioned air. It is estimated that up to half the air coming into the facility can be wasted in some data centers due to the many gaps that exist in the front of racks.

Containment: Aisle containment is a little more expensive than sealing tape, grommets, floor tiles, and blanking panels. But it is a smart investment. By partitioning the aisles with large panels that surround and enclose the racks, you separate the cold supply air from the hot exhaust. Even facilities that have implemented containment are advised to inspect it for holes caused by cables or damage. Doorways are often places where air escapes. Replacing leaky doors should also be considered.
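One way to keep a walk-through inspection organized is to log each finding against the remedy suggested above. The sketch below is a minimal illustration only. The issue keys, the `Finding` class, and the sample locations are hypothetical and not part of any Upsite tool.

```python
from dataclasses import dataclass

# Remedies follow the checklist above; the issue keys are illustrative.
REMEDIES = {
    "cracked_or_missing_tile": "fix or replace the tile",
    "perforated_tile_in_hot_aisle": "put the correct tile in this aisle",
    "unsealed_floor_opening": "seal with sealing tape",
    "cable_cutout": "fit a grommet",
    "open_rack_space": "install a blanking panel",
    "leaky_door": "consider replacing the door",
}


@dataclass
class Finding:
    location: str  # where the problem was seen, e.g. an aisle or rack label
    issue: str     # one of the keys in REMEDIES


def work_list(findings: list[Finding]) -> list[str]:
    """Turn inspection findings into a list of remediation tasks."""
    tasks = []
    for f in findings:
        # Unknown issue types are flagged rather than silently dropped.
        remedy = REMEDIES.get(f.issue, "review manually")
        tasks.append(f"{f.location}: {f.issue} -> {remedy}")
    return tasks


# Hypothetical findings from one pass through the room.
findings = [
    Finding("Hot aisle 3", "perforated_tile_in_hot_aisle"),
    Finding("Rack B07", "open_rack_space"),
    Finding("Rack C12", "cable_cutout"),
]
for task in work_list(findings):
    print(task)
```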

Conclusion

New data centers can be designed to achieve the highest levels of efficiency. Where the resources exist, older data centers can be redesigned and reconfigured to improve their PUE. Where budgets are tight or PUE remains difficult to improve, thorough inspection of the above basics is likely to reveal a great many simple and inexpensive ways to improve PUE further.
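To put a number on what a PUE improvement is worth, the sketch below estimates annual energy savings from the relationship that facility energy equals IT energy multiplied by PUE. It assumes a constant IT load and a constant PUE all year, which real sites will not have. The 500 kW load is hypothetical, while 1.56 and 1.3 are the survey average and the recent-build figure cited earlier.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours; leap years ignored


def annual_facility_kwh(it_load_kw: float, pue: float) -> float:
    """Annual facility energy, assuming constant IT load and constant PUE."""
    return it_load_kw * pue * HOURS_PER_YEAR


def annual_savings_kwh(it_load_kw: float, pue_before: float,
                       pue_after: float) -> float:
    """Energy saved per year by moving from pue_before to pue_after."""
    return (annual_facility_kwh(it_load_kw, pue_before)
            - annual_facility_kwh(it_load_kw, pue_after))


# Hypothetical 500 kW IT load moving from the survey average to 1.3.
saved = annual_savings_kwh(500.0, 1.56, 1.30)
print(f"Estimated savings: {saved:,.0f} kWh per year")
```

Multiplying the result by a local electricity rate gives a rough cost figure to weigh against the price of tape, grommets, blanking panels, or containment.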

Drew Robb

Writing and Editing Consultant and Contractor
